I have been following the development of this branch of AI/machine learning for years now. I feel it has evolved beyond expectations, yet public awareness has not kept pace. I would like to share a little about “deepfakes”, a fast-evolving and dangerous application of AI.

Introduction

Deepfakes can swap anyone’s face (as well as voice and expressions) onto someone else. Deepfakes have gathered widespread attention for their use in celebrity pornographic videos, revenge porn, fake news, hoaxes, and financial fraud. And if this technology can put words in politicians’ mouths, it could do a lot worse (which is already happening, and not only as comedy).

Not to forget shallow fakes (low-tech video/audio manipulation), such as the controversy surrounding the doctored video of President Trump’s confrontation with CNN reporter Jim Acosta at a November 2018 press conference.

Security experts globally are concerned about the future of this technology. Currently, governments and industry are working together to restrict its use and bring in legislation. In the US, California outlawed the distribution of doctored videos, images or audio of political candidates within 60 days of an election, and China now requires deepfake content to be clearly labelled. I expect other countries to follow.

Very few people understand the impressive (and, in the wrong hands, dangerous) technology behind them, so I will give a high-level overview for everyone.

So, what really is a deepfake?

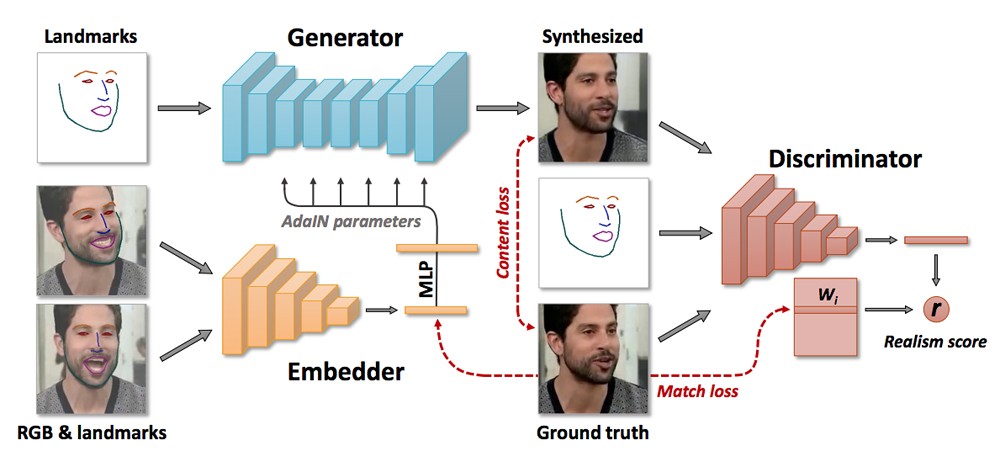

Deepfake (also spelled deep fake) is a type of artificial intelligence used to create convincing image, audio, and video hoaxes. Deepfake content is often created using two competing AI models: one called the generator and the other called the discriminator.

Together, the generator and discriminator form what is called a Generative Adversarial Network (GAN). The generator produces fake content, and the discriminator tries to tell it apart from real examples. Each time the discriminator correctly identifies content as fabricated, it gives the generator valuable information about how to make the next deepfake more convincing.
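To make this feedback loop concrete, here is a minimal sketch, assuming the loss functions from the original GAN paper (Goodfellow et al., 2014), including its commonly used “non-saturating” generator loss. The discriminator outputs a probability that a sample is real. The function names are illustrative only and are not taken from any particular deepfake tool.

```python
import math

def sigmoid(logit: float) -> float:
    """Map the discriminator's raw score to a probability that the input is real."""
    return 1.0 / (1.0 + math.exp(-logit))

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy: low when real samples score near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: high when the discriminator spots the fake."""
    return -math.log(d_fake)

def generator_signal(fake_logit: float) -> float:
    """Gradient of generator_loss with respect to the discriminator's logit on a fake.

    With d_fake = sigmoid(logit), d/dlogit[-log(sigmoid(logit))] = -(1 - d_fake),
    so a confidently rejected fake (d_fake near 0) yields a strong update signal.
    """
    return -(1.0 - sigmoid(fake_logit))

if __name__ == "__main__":
    for logit in (-4.0, 0.0, 4.0):
        d_fake = sigmoid(logit)
        print(f"D(fake)={d_fake:.3f}  G loss={generator_loss(d_fake):.3f}  "
              f"signal={generator_signal(logit):.3f}")
```

A fake that the discriminator confidently rejects (D(fake) close to 0) produces the largest generator loss and the strongest update signal, which is the “valuable information” the generator uses to improve its next attempt.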

The architecture flow below explains the journey.

A good explanation of how deepfakes work (video below):

Special thanks to Egor Zakharov for the video and the diagram above. For a deeper technical understanding, read his (very thorough) paper: https://arxiv.org/pdf/1905.08233.pdf

If you wish to read more about GANs: https://en.wikipedia.org/wiki/Generative_adversarial_network

The amount of deepfake content online is growing rapidly. At the beginning of 2019, there were 7,964 deepfake videos online, according to a report from the startup Deeptrace; just nine months later, that figure had jumped to 14,678. I will share trends (charts) and deeper insights in later posts.
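As a quick check on how fast that is, the sketch below computes the total growth between the two Deeptrace counts and the equivalent compound monthly rate. The monthly figure assumes, for illustration only, steady month-on-month growth over the nine months; the report itself gives only the two counts.

```python
def growth_rates(start: int, end: int, months: int) -> tuple[float, float]:
    """Return (total growth, equivalent compound monthly growth) as fractions."""
    ratio = end / start
    total = ratio - 1.0
    # Monthly rate r such that (1 + r) ** months == ratio (assumes steady growth).
    monthly = ratio ** (1.0 / months) - 1.0
    return total, monthly

total, monthly = growth_rates(7964, 14678, 9)
print(f"total growth: {total:.1%}, compound monthly growth: {monthly:.1%}")
```

With these figures the count grows by roughly 84% overall, or about 7% per month compounded.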

An effective example of shallow fakes followed by deepfakes (video below):

Let’s get back to the deepfake world (an interesting clip below).

Experiment:

I thought I would give deepfakes a go using some of the apps available today. I started with FaceApp, which felt like Snapchat filters (it was OK); it has a pro version, which I didn’t try. Then I tried the Doublicat app, made by RefaceAI, a Ukrainian company (low tech but good), and this was scary: it was so easy for me to create a deepfake (or shallow fake).

I used a single selfie (one frame) to create a deepfake of “The Rock”, and the results are very good (the scary part is that it took only a few seconds to render this image into the video). Imagine if I had used 8 or 16 frames, or a full video of myself!

Well, the last frame of this video is the “real me”!

The term deepfakes originated around the end of 2017 from a Reddit user named “deepfakes”. He, along with others in the Reddit community r/deepfakes, shared the deepfakes they created. Many videos involved celebrities’ faces swapped onto the bodies of actresses in pornographic videos, while non-pornographic content included many videos with actor Nicolas Cage’s face swapped into various movies.

In January 2018, a proprietary desktop application called FakeApp was launched. It allowed users to easily create and share videos with their faces swapped with each other. As of 2019, FakeApp has been superseded by open-source alternatives such as Faceswap and the command-line-based DeepFaceLab (there are now many such apps around the globe).

“In January 2019, deepfakes were buggy and flickery,” said Hany Farid, a UC Berkeley professor and deepfake expert. “Nine months later, I’ve never seen anything like how fast they’re going. This is the tip of the iceberg.”

By March 2020, we had seen videos with close to 95% accuracy.

Are deepfakes a threat?

Since video deepfakes are still maturing, from my perspective audio deepfakes are the biggest threat at present and for the coming years (many security experts are not yet aware of this, but governments are warning cyber experts). The implications can be far more dangerous than perceived.

Imagine a deepfake AI calling you on your phone, pretending to be a loved one. What would you not do? In that moment, you might not even think of doubting or verifying the call (and there are many such scenarios).

Audio deepfakes have been used as part of social engineering scams, fooling people into thinking they are receiving instructions from a trusted individual.

In 2019, a U.K.-based energy firm’s CEO was scammed over the phone when he was ordered to transfer €220,000 into a Hungarian bank account by an individual who used audio deepfake technology to impersonate the voice of the firm’s parent company’s chief executive.

Another worry is audio or video evidence in a court of law: how much value will such evidence have going forward (or is this already happening)? That is a bigger discussion for world governments in the future.

Until recently, video content was more difficult to alter in any substantial way. Because deepfakes are created through AI, however, they don’t require the considerable skill it would otherwise take to create a realistic video. Unfortunately, this means that just about anyone can create a deepfake to promote their chosen agenda. One danger is that people will take such videos at face value; another is that people will stop trusting the validity of any video content at all.

On the positive side, simpler forms of this technology have long been used by the film industry in post-production, and the industry is now using the latest techniques to ‘bring deceased actors back to life’ for film sequels (a process known as ‘digital resurrection’).

What’s on the legal front?

In 2018 and 2019, many laws were passed in the United States that make certain uses of deepfakes a crime (variously framed as identity theft, cyberstalking, or revenge porn). In the United Kingdom, producers of deepfake material can be prosecuted for harassment, but there are calls to make deepfakes a specific crime.

EU countries and many other nations see this as a critical threat and are working towards restricting its use.

Detection:

Experts continue to disagree on whether media forensics is capable of screening out deepfakes. Also, there has been insufficient research on how deepfakes influence the behaviour and beliefs of viewers.

Governments, universities, and tech firms are all funding research to detect deepfakes. In December 2019, the first Deepfake Detection Challenge kicked off, backed by Microsoft, Facebook, and Amazon. It brings together research teams from around the globe competing for supremacy in the deepfake detection game (with $1 million in total prize money). Results are still to come.
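A competition like this needs a way to rank detectors. One common choice for probabilistic classifiers is binary log loss, which heavily penalises confident mistakes. Below is a minimal sketch, assuming each detector outputs a probability that a video is fake; the labels and predictions are made-up illustrative values, not challenge data, and this is not presented as the challenge’s official scoring code.

```python
import math

def log_loss(labels: list[int], probs: list[float], eps: float = 1e-15) -> float:
    """Mean binary log loss; labels are 1 for fake, 0 for real."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip so log(0) never occurs
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

labels = [1, 0, 1, 0]                 # hypothetical ground truth: 1 = deepfake
cautious = [0.7, 0.3, 0.7, 0.3]       # hypothetical detector A: right, moderately sure
reckless = [0.99, 0.01, 0.01, 0.01]   # hypothetical detector B: one confident miss
print(f"A: {log_loss(labels, cautious):.3f}  B: {log_loss(labels, reckless):.3f}")
```

Detector B is very confident and right three times out of four, yet its single confident miss gives it a much worse score than detector A. This rewards detectors that are honest about their uncertainty.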

Solution?

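Technology leaders suggest that AI will be able to detect the fakes. In my view, AI creating deepfakes and AI detecting them doesn’t sound right: just as the discriminator in a GAN trains the generator, every improvement in detection can be used to train better fakes.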

Awareness:

Malicious use, such as fake news, will be even more harmful if nothing is done to spread awareness of deepfake technology.

In all my technology conferences with leaders, I have emphasized the importance of awareness and training, the only real weapon against these threats (it’s a big discussion, which I will leave for future articles).

Only human awareness and training can help us understand and fight this threat. As with earlier internet developments (websites, IoT, then apps) and with learning to identify spam and phishing, it’s a journey.

I will not be surprised if YouTube, Vimeo, Facebook, Google, and all the major cloud providers have automatic detection tools to block deepfakes. Well, that’s two years from now.

I’m happy to answer and learn, so please share any questions, comments, or suggestions.

Thank you for reading.

References:

Egor Zakharov: https://www.youtube.com/watch?v=p1b5aiTrGzY&t=97s

Rössler, A. et al. (2019) FaceForensics++: Learning to Detect Manipulated Face Images. Available here: https://arxiv.org/abs/1901.08971

Deeptrace: https://deeptracelabs.com/mapping-the-deepfake-landscape/

Statement for the Record: 2019 Worldwide Threat Assessment of the U.S. Intelligence Community: https://www.odni.gov/files/ODNI/documents/2019-ATA-SFR---SSCI.pdf


Big thank you to all collaborators.